Date:

16/12 2010 - 17/12 2010

Duration of activity:

9 hours + 3 hours

Group members participating:

Frederik, Christian & Lasse

Goals:

To investigate the accelerometer and how a program using both an accelerometer and a gyro sensor can make the robot balance.

Plan:

1. Integrate the Bluetooth framework in the main application

2. Get the accelerometer mounted

3. Be able to receive accelerometer sensor inputs and do experiments

4. Do experiments with combining accelerometer and gyro sensor

5. Refactor the overall software architecture

Results:

1. Bluetooth integration

The code for the Bluetooth communication created in the last session was migrated to the main application without any complications.

2. Accelerometer mounting

The accelerometer is a 3-axis accelerometer from HiTechnic [1], which will help us determine whether the robot is upright. The measurement range is from -2g to +2g, and the axes are defined as shown in Figure 1.

Figure 1 - The axes definition of the accelerometer

We mounted the accelerometer with the x-axis facing down along the side of the robot. We did this to make sure that the accelerometer was aligned with the vertical axis of the robot. We also moved the gyro sensor to the opposite side to make the robot symmetric. An image of this is shown in Figure 2.

Figure 2 - The robot with accelerometer and gyro sensor

3. Accelerometer experiments

We used the lejos.nxt.addon.TiltSensor [2] class for reading sensor values. It is possible to get two values for each axis:

- getTilt() - gets the tilt value where 200 corresponds to 1g

- getAcceleration() - acceleration measured in mg (g = acceleration due to gravity = 9.81 m/s^2)
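
To make the two scales concrete, the following is a minimal sketch of the unit conversions implied by the list above. The class and method names are our own illustration and not part of leJOS; only the figures 200 units per g and 9.81 m/s^2 come from the text.

```java
/** Unit conversions for the two kinds of accelerometer readings (illustrative helper, not a leJOS class). */
final class AccelUnits {
    /** Tilt units per 1 g, as stated above (200 corresponds to 1 g). */
    static final double TILT_UNITS_PER_G = 200.0;
    /** Acceleration due to gravity in m/s^2. */
    static final double G = 9.81;

    /** Converts a tilt reading to multiples of g. */
    static double tiltToG(int tilt) {
        return tilt / TILT_UNITS_PER_G;
    }

    /** Converts an acceleration reading in mg to m/s^2. */
    static double milliGToMetersPerSecondSquared(int milliG) {
        return milliG / 1000.0 * G;
    }

    /** Size of one tilt step expressed in mg. */
    static double tiltStepInMilliG() {
        return 1000.0 / TILT_UNITS_PER_G;
    }
}
```

With these numbers, one step of the tilt value corresponds to 5 mg, so a reading in mg can in principle resolve smaller changes than the tilt value can.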

Initially we wrote a test program to get an idea of how the accelerometer worked and how it would react. From this we found that it was a good idea to use one axis (X) to measure the acceleration and another axis (Y) to measure the direction of this acceleration. The reason is that changes in the X-axis value had the same sign regardless of the direction in which the accelerometer was tilted. The Y-axis, on the other hand, went into overflow around the balancing point, which allowed us to use it as an indicator of the direction in which the robot was toppling. Unfortunately we did not capture a graph that shows this clearly, but the behaviour can be glimpsed in Figure 3, where the red graph is the X-axis, the yellow graph is the Y-axis, and the green graph is the combined data.
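
The idea of taking the magnitude from one axis and the direction from the other can be sketched as follows. This is only an illustration under assumptions: the name `SignedTilt`, the calibration value `xUpright`, and the reduction of the Y reading to a plain sign are hypothetical, and the sketch does not model how the overflow actually appears in the raw data.

```java
/** Combines an unsigned X deviation with a direction taken from Y (illustrative sketch). */
final class SignedTilt {
    /**
     * @param x        raw X-axis reading
     * @param xUpright X-axis reading when the robot is upright (hypothetical calibration value)
     * @param y        raw Y-axis reading; only its sign is used here (assumption)
     * @return the X deviation from upright, negated when y is negative
     */
    static int derive(int x, int xUpright, int y) {
        int magnitude = Math.abs(x - xUpright); // same value for both tilt directions
        return y < 0 ? -magnitude : magnitude;  // Y decides the direction
    }
}
```

Which sign corresponds to falling forwards depends on how the sensor is mounted and would have to be determined by experiment.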

Figure 3 - Accelerometer readings:

red: x-axis tilt

yellow: y-axis tilt

green: derived x-axis tilt

Figure 4 - Accelerometer readings:

red: x-axis tilt

yellow: y-axis tilt

green: derived x-axis tilt

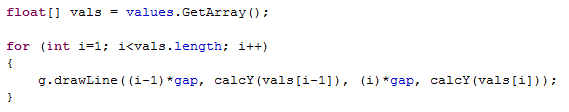

We found that the spikes were caused by our regulation thread and Bluetooth thread reading the sensor simultaneously. We solved this by adding a façade class in front of the accelerometer sensor, thereby centralizing access to the accelerometer.

4. Combining accelerometer and gyro sensor

There are not many leJOS projects on the internet that combine accelerometer and gyro values, but we found one by Andy Shaw. He has implemented a leJOS balancing robot [3] using a homebuilt accelerometer and the gyro sensor from HiTechnic. The code is rather complex because it uses a Kalman filter [4] to join the values from the accelerometer and the gyro, thereby eliminating the drift from the gyro. Because of this complexity we decided to leave the project for later experiments and try an alternative, simpler approach.

Our strategy is to use the gyro sensor for regulation and the accelerometer for detecting whether the robot has reached the set point. When the set point is reached, the gyro angle is reset to 0 to eliminate the drift.
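
A minimal sketch of this strategy is shown below. It is not our actual regulation code: the class name, the tolerance parameter, and the units (degrees per second for the gyro, raw accelerometer units for the deviation) are assumptions made for the illustration.

```java
/** Integrates the gyro rate and clears the accumulated drift at the set point (illustrative sketch). */
final class DriftResettingAngle {
    private final int setPointTolerance; // hypothetical: allowed accelerometer deviation at the set point
    private double angle;                // integrated gyro angle in degrees

    DriftResettingAngle(int setPointTolerance) {
        this.setPointTolerance = setPointTolerance;
    }

    /**
     * @param gyroRate       angular velocity in degrees per second, offset already removed
     * @param dt             time since the previous call in seconds
     * @param accelDeviation accelerometer reading minus its value when upright
     * @return the current angle estimate in degrees
     */
    double update(double gyroRate, double dt, int accelDeviation) {
        angle += gyroRate * dt; // integrate the rate; any offset error accumulates as drift
        if (Math.abs(accelDeviation) <= setPointTolerance) {
            angle = 0.0;        // accelerometer reports the set point, so discard the drift
        }
        return angle;
    }
}
```

The sketch makes the dependency visible: the reset is only as good as the accelerometer's ability to report the set point, so a coarse or noisy accelerometer reading resets the angle at the wrong moments.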

Initially we used the method TiltSensor.getTilt() to read the state of the accelerometer. After some experiments we found that the data returned from getTilt() was too coarse-grained: the accelerometer reported the robot as being at the set point over too wide a range around that point. We therefore switched to the method TiltSensor.getAccel(). This method returned data with a higher resolution than getTilt(), which narrowed the range in which the accelerometer reported the set point. This, however, introduced a different problem in the accelerometer readings: fluctuating output.

In order to cancel out the noise in the accelerometer we implemented a running average mechanism. After experimenting with different ranges of values we concluded that it made the robot too unresponsive, so we needed another strategy to handle the accelerometer properly.
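
For reference, a running average of the kind described can be sketched as below. The class name and the fixed-size window are assumptions for the illustration; our own implementation may have differed in detail.

```java
/** Running average over the most recent samples (illustrative sketch). */
final class RunningAverage {
    private final int[] window; // circular buffer holding the latest samples
    private int count;          // number of valid samples in the buffer
    private int next;           // index where the next sample is written
    private long sum;           // sum of the valid samples

    RunningAverage(int size) {
        if (size <= 0) {
            throw new IllegalArgumentException("size must be positive");
        }
        window = new int[size];
    }

    /** Adds a sample and returns the average of the samples currently in the window. */
    double add(int sample) {
        if (count == window.length) {
            sum -= window[next]; // buffer is full: drop the oldest sample
        } else {
            count++;
        }
        window[next] = sample;
        sum += sample;
        next = (next + 1) % window.length;
        return (double) sum / count;
    }
}
```

With a window of four samples, a sudden change from 0 to 100 is reported as 25, 50, 75 and only then 100. A larger window suppresses more noise but delays the response correspondingly, which is consistent with the unresponsiveness we observed.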

5. Code refactoring

When we ran the program based on the code by Anette et al. we actually got worse performance than in Session 2. We found that the error originated in the program structure. We had seven threads running, including Bluetooth communication, so we decided to simplify our architecture by reducing the number of threads to two. The resulting architecture is illustrated in Figure 5.

Figure 5 - UML class diagram showing the new and improved architecture

When designing the architecture, alternative architectures were considered. One possibility was a subsumption architecture [5,7], where there is a single thread for each behaviour and thereby decentralized sensing. This solution was not chosen because our goal was not to support different behaviours. Furthermore, the different behaviours combined with Bluetooth communication can be so CPU intensive that balancing becomes impossible [5].
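
In contrast to decentralized sensing, the chosen design lets both threads reach the accelerometer through a single façade object, as described in section 3. A minimal sketch of such a façade follows. The interface `RawAccelerometer` is a hypothetical stand-in for the real sensor driver, and the method names are our own illustration rather than the leJOS API.

```java
/** Hypothetical stand-in for the real sensor driver. */
interface RawAccelerometer {
    int readX();
    int readY();
    int readZ();
}

/** Single point of access to the accelerometer, shared by all threads (illustrative sketch). */
final class AccelerometerFacade {
    private final RawAccelerometer sensor;

    AccelerometerFacade(RawAccelerometer sensor) {
        this.sensor = sensor;
    }

    /**
     * Reads all three axes while holding the lock on this object,
     * so two threads can never talk to the sensor at the same time.
     *
     * @return the readings as {x, y, z}
     */
    synchronized int[] read() {
        return new int[] { sensor.readX(), sensor.readY(), sensor.readZ() };
    }
}
```

The regulation thread and the Bluetooth thread would share one `AccelerometerFacade` instance and never touch the driver directly. The lock only helps if every read goes through the same instance.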

Conclusion:

The new architecture improved performance, but we still had problems telling the robot when it is upright because of the performance of the accelerometer. After doing experiments with the accelerometer and the built-in leJOS class TiltSensor, we can conclude that the getTilt() method is too inaccurate to determine exactly whether the robot is upright. The getAccel() method has, in contrast to getTilt(), a relatively high resolution, but the high resolution reveals the oscillation in the accelerometer. The noise in the accelerometer led to a lot of experiments, ranging from a simple running average to homemade filters. All of these failed and clearly showed that the accelerometer is not capable of balancing the robot without assistance from another sensor.

References:

[1] HiTechnic NXT Acceleration Sensor - https://www.hitechnic.com/cgi-bin/commerce.cgi?preadd=action&key=NAC1040

[2] TiltSensor class, Session 3 - http://lejos.sourceforge.net/p_technologies/nxt/nxj/api/lejos/nxt/TiltSensor.html

[3] Andy Shaw, Lego projects - http://www.gloomy-place.com/legoindex.htm

[4] Wikipedia, Kalman filter - http://en.wikipedia.org/wiki/Kalman_filter

[5] Rodney Brooks, A robust layered control system for a mobile robot, IEEE Journal of Robotics and Automation, RA-2(1):14-23, 1986

[6] Marvin Project, Lab report 5 - Bluetooth controlled robot - http://wiki.aasimon.org/doku.php?id=marvin:ecp5

[7] Subsumption Architecture, Wikipedia - http://en.wikipedia.org/wiki/Subsumption_architecture

[8] L., Rasmussen, F., Laulund & C., Jensen, Session 2 source code on NXT, http://dl.dropbox.com/u/2389829/Lego/Session%203/Session%203%20NXT%20source%20code.zip

[9] L., Rasmussen, F., Laulund & C., Jensen, Session 2 source code on PC, http://dl.dropbox.com/u/2389829/Lego/Session%203/Session%203%20PC%20source%20code.zip