Date:

9/12 2010

Duration of activity:

7.5 hours

Group members participating:

Frederik, Christian & Lasse

Goals:

A. Allow for runtime adjustment of robot parameters such as PID-values, and to collect data/feedback from the robot.

B. Download the code from the Marvin-project to the robot, and make the necessary adjustments in order to make the robot balance.

Plan:

1. Acquire yet another NXT brick, and install leJOS on it.

2. Examine and prepare the Marvin-project to be downloaded to the robot (goal B).

3. Establish Bluetooth connection between NXT brick and computer (goal A).

4. Implement PC application with GUI for runtime adjustment of parameters and presentation of robot vitals (goal A).

Results:

1. Acquire another NXT

We were able to borrow another NXT brick from the library at the Engineering College of Aarhus. The brick contained the original Lego firmware, so we uploaded the leJOS firmware to the brick to be able to upload leJOS programs. Having a second brick made it possible for us to work more efficiently on simultaneous tasks.

2. The Marvin project

We discovered that the Marvin group must have used version 0.8 of leJOS [3]: in version 0.85, which we use, the method getActualSpeed() in the Motor class no longer exists. This was a problem because we could not run their program without modifying their code. We replaced getActualSpeed() with getSpeed() because the documentation had similar descriptions for both methods. When running their code we received faulty values for both motor power and the gyro sensor, and these values kept rising. We therefore decided to ignore the tacho counter in their code, to rule out motor errors caused by the replacement of getActualSpeed(), and instead focus on the gyro sensor. We modified their GyroscopeSensor class to use an ADSensorPort instead of SensorPort and added a call to the method setTypeAndMode(), after which we saw more promising results from the gyro sensor.

To make sure the different versions were not the main source of error, we rolled back to version 0.8. This caused some trouble for Eclipse and we were not able to upload any programs; our theory is that the firmware versions were incompatible. After having no luck with different firmware versions, we decided to give up the experiments with Marvin and instead focus on the project done by Annette et al., in the hope that they used version 0.85 of leJOS.

The Annette project

To balance the robot with the unmodified code from Annette et al. we would have needed a Bluetooth connection running between the NXT and a PC. We modified their code so that we did not have to use Bluetooth just to test their algorithm. Besides that, we modified the project with two classes, NewGyroSensor [4] and GyroSejway [5], which fitted our needs better. We tweaked the parameters of these classes to observe the effect of the different parameters. We merged the running average on the offset from Marvin into our existing gyro class, but we only noticed a slight performance improvement. This ended up being the final result for this session - the code is found in the classes NewGyroSensor and GyroSejway.
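The idea of a running average on the gyro offset can be sketched in plain Java as follows. This is only an illustration and not the code from NewGyroSensor or from Marvin: the class name GyroOffsetFilter, the weight alpha and the choice of an exponential running average are our assumptions, and the raw reading is passed in as a number so that the sketch runs without leJOS.

```java
/**
 * Sketch of a gyro offset tracker (hypothetical class, not part of leJOS).
 * The offset is the raw sensor value at rest; it is tracked with an
 * exponential running average so that slow drift is followed over time.
 */
class GyroOffsetFilter {
    private final double alpha; // weight of each new sample (assumed tuning value)
    private double offset;      // current estimate of the raw value at rest

    GyroOffsetFilter(double initialOffset, double alpha) {
        if (alpha < 0.0 || alpha > 1.0) {
            throw new IllegalArgumentException("alpha must be in [0, 1]");
        }
        this.offset = initialOffset;
        this.alpha = alpha;
    }

    /** Feeds one raw sample and returns the reading relative to the updated offset. */
    double update(double rawValue) {
        offset = (1.0 - alpha) * offset + alpha * rawValue;
        return rawValue - offset;
    }

    double getOffset() {
        return offset;
    }
}
```

A small alpha makes the offset follow slow drift while leaving fast rotations mostly intact; a large alpha would also average away the tilt signal the balancing loop depends on.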

A video of the final result of the day can be seen below:

Video 1 - Final result

3. Bluetooth connection

To be able to adjust parameters used in the robot logic, we want to implement a PC application that can send data to and receive data from the NXT brick via Bluetooth. Ideally the robot should connect to the PC on start-up, thereby acting as master in the communication. This would mean that we would not need to initiate the connection from the PC application but could merely wait for a connection from the NXT. According to the leJOS API it should be possible to initiate a connection from the NXT brick via the static functions of the Bluetooth class.

Unfortunately we could not get the NXT brick to act as master in the Bluetooth communication. We were not even able to pair the NXT and the PC manually via the leJOS menu on the NXT. This might have been a problem with our specific brick or with the PC we tried to connect to. However, manually pairing the newly acquired NXT brick was just as unsuccessful, and the PC we tried to connect to is the same one we use to load code onto the NXT via Bluetooth.

We decided not to pursue this problem any further and to accept that the connection must be established from the PC side, which means we have to initiate the connection ourselves (with a click). The NXT will then act as slave and wait for a connection from the PC upon start.

The NXT part of the connection is implemented as follows:

This process presented us with another array of problems, since leJOS does not allow a class to be named "BluetoothConnection", as this is a reserved name. "BTConnection" is also a reserved class name. This was very unfortunate, as these were exactly the first two names we used for our class. It led to quite a bit of confusion, since the error messages were not self-explanatory.

Also in order to use Bluetooth on NXT, the 3rd party leJOS library "bluecove" had to be included in the project build path. Bluecove can be located in the leJOS NXJ subfolders.

The data reception/sending is implemented in a single thread with reception as highest priority. This means that data will only be sent if there is no data ready to be read on the connection.
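The receive-first rule described above can be sketched as one pass of the thread loop. This is a minimal sketch and not our actual NXT class: the name ReceiveFirstPump, the choice of single float values as messages and the outgoing queue are assumptions, and plain java.io streams stand in for the streams of the Bluetooth connection so that the sketch runs on a PC.

```java
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.util.ArrayDeque;
import java.util.Queue;

/** Hypothetical helper: reads when data is pending, otherwise sends one queued value. */
class ReceiveFirstPump {
    private static final int FLOAT_SIZE = 4; // bytes per message (assumed format)

    private final DataInputStream in;
    private final DataOutputStream out;
    private final Queue<Float> outgoing = new ArrayDeque<Float>();
    private float lastReceived = Float.NaN;

    ReceiveFirstPump(InputStream in, OutputStream out) {
        this.in = new DataInputStream(in);
        this.out = new DataOutputStream(out);
    }

    /** Queues a value (e.g. a sensor reading) to be sent when nothing is waiting to be read. */
    void queue(float value) {
        outgoing.add(value);
    }

    float getLastReceived() {
        return lastReceived;
    }

    /** Runs one pass of the loop; returns true if a value was received. */
    boolean step() {
        try {
            if (in.available() >= FLOAT_SIZE) {
                // Reception has priority: nothing is sent in this pass.
                lastReceived = in.readFloat();
                return true;
            }
            Float next = outgoing.poll();
            if (next != null) {
                out.writeFloat(next);
                out.flush();
            }
            return false;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

In a thread, step() would be called in a loop with a sleep between passes. Checking available() first keeps the loop from blocking in readFloat() while there is still data to send.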

The sleep between each run of the Bluetooth thread logic must be chosen with regard to the load on the NXT's processing power. The Bluetooth connection should not compromise the main functionality of the robot. This is a matter that we must pay attention to on a later occasion.

4. PC application

Since the NXT was not able to act as master in the Bluetooth connection, the PC application had to have a connection button which initiates the Bluetooth connection. As soon as the connection is established, values for the calibration parameters in the robot logic can be entered, and sent via the GUI.

In order to monitor the sensor values and vitals (e.g. the calculated regulation power) of the robot, the PC application constantly waits for data from the Bluetooth connection. We considered whether these values should be stored in a file for later investigation, but the best feature for us would be a means of live monitoring of sensor values and robot vitals. Therefore we wanted to present the received values in a graph with an appearance like that of an EKG.

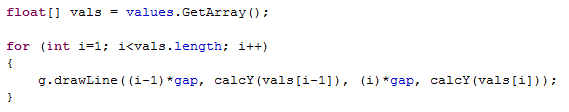

Since there is no standard implementation of such a graph in java.awt [6] or Swing [7], we decided to implement it on our own. We implemented a class CustomGraph [8] that stores values in a circular buffer (also self-implemented) so that it always holds the same number of values. With every update of the CustomGraph, the values are read from the buffer, and each measurement is presented as a line drawn via the Graphics.drawLine() function. The CustomGraph class had to extend JPanel in order to allow for Graphics manipulation.
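A fixed-size circular buffer of the kind described above could look roughly like this. It is a sketch under our own assumptions and not the buffer used in CustomGraph [8]: the class name CircularBuffer, the int element type and the method names are hypothetical.

```java
/** Hypothetical fixed-capacity buffer that overwrites its oldest value when full. */
class CircularBuffer {
    private final int[] values;
    private int next; // index where the next value will be written
    private int size; // number of values currently stored

    CircularBuffer(int capacity) {
        if (capacity <= 0) {
            throw new IllegalArgumentException("capacity must be positive");
        }
        values = new int[capacity];
    }

    /** Adds a value, replacing the oldest one once the buffer is full. */
    void add(int value) {
        values[next] = value;
        next = (next + 1) % values.length;
        if (size < values.length) {
            size++;
        }
    }

    int size() {
        return size;
    }

    /** Returns the i-th stored value, where index 0 is the oldest. */
    int get(int i) {
        if (i < 0 || i >= size) {
            throw new IndexOutOfBoundsException("index " + i + ", size " + size);
        }
        int oldest = (next - size + values.length) % values.length;
        return values[(oldest + i) % values.length];
    }
}
```

A paint routine can then walk from index 0 to size() - 1 and draw one line segment between each pair of neighbouring values, so the newest measurement always appears at the same edge of the graph.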

Video 2 shows the result of the GUI application:

Video 2 - GUI application

Conclusion:

Getting the sample code running was far more difficult than expected because of differences in leJOS API and firmware versions. As a result we did not use the Marvin project as a starting point and instead used the project done by Annette et al.

With this software loaded onto the robot it was possible to see that the robot attempted to balance. It was, however, not fully up to the task, although it was pretty close. Some of the trouble with getting it to balance was due to difficulties in calibrating the robot, but the main trouble was the drifting of the gyro. During some of the longer balancing sessions it was possible to see how the gyrometer suffered from drift, as the robot began to tilt as time went on.

We experienced that the gyrometer is quite sensitive to blows. By tapping the robot either in the front or in the back, it was possible to counteract the drifting a bit. The harder the blow or the closer to the gyrometer the "impact" occurs, the bigger the influence. A bit of a tedious method for eliminating drift, but it is a nice thing to know.

The PC application and the Bluetooth communication have been implemented and work as intended. It is possible to send PID and scale values to the robot and to receive sensor values and robot vitals from the robot. By implementing the graph, and thereby showing the data received from the robot at runtime, we should be better able to select the correct values for the PID calculation. This will be a good tool for our experimentation with other balancing methods in future sessions.

The code for this session is found here: NXT [9] and PC [10].

References:

[1] Johnny Rieper et al., "Marvin NXT", http://wiki.aasimon.org/doku.php?id=marvin:marvin

[2] Annette et al., "Balancing robot", http://annettesamuelrasmus.blogspot.com/

[3] leJOS version overview, http://sourceforge.net/projects/lejos/files/lejos-NXJ-win32/

[4] NewGyroSensor Java class, http://dl.dropbox.com/u/2389829/Lego/Session%202/NewGyroSensor.java

[5] GyroSejway Java class, http://dl.dropbox.com/u/2389829/Lego/Session%202/GyroSejway.java

[6] Java AWT, http://java.sun.com/products/jdk/awt/

[7] Java Swing, http://java.sun.com/products/jfc/tsc/articles/architecture/

[8] CustomGraph Java class, http://dl.dropbox.com/u/2389829/Lego/Session%202/CustomGraph.java

[9] L. Rasmussen, F. Laulund & C. Jensen, Session 2 source code on NXT, http://dl.dropbox.com/u/2678729/Lego/code/Session%202%20NXT%20source%20code.zip

[10] L. Rasmussen, F. Laulund & C. Jensen, Session 2 source code on PC, http://dl.dropbox.com/u/2678729/Lego/code/Session%202%20PC%20source%20code.zip
